OpenAI's Q*

This perfect intro is a trailer for what you are about to read.

What is Q*? Why did OpenAI researchers warn the board it was a "threat to humanity"? What did Ilya see? A small clue from a recent interview:

INTERVIEWER: How original or creative are the latest LLMs? AlphaGo did some pretty creative moves. Do you think GPT-5 will be able to state a new nontrivial mathematical conjecture? I'm not saying proving it, I'm saying stating it. Who thinks it's possible within the next five years?

ILYA SUTSKEVER: Are you sure that the current model cannot do it?

(thanks to @AISafetyMemes)

AI is getting weird. OpenAI's up to something, and the AI crowd (me included) is losing it over all the secrets and cryptic drops. Conspiracy theories are flying around - because, let's be honest, everything's a conspiracy until it turns out to be real. And lately? That's been happening a lot.

The center of all this madness? Q*. Apparently, it's a breakthrough so big it could flip the table on everything we know. (Did I add a little drama? Yes. But also… what if?)

Discovery of Q*

The story began in November 2023, when Reuters dropped a bombshell that had AI freaks drooling over conspiracy theories. According to the report, OpenAI had secretly created Q*, an AI model that could solve basic, grade-school-level math problems, something that was reportedly seen as unprecedented.

But this breakthrough came with a price. Rumors of an imminent threat to humanity started spreading, as OpenAI's Chief Scientist Ilya Sutskever and other researchers reportedly panicked and warned the board about the dangers of Q*.

Months earlier, Ilya had posted some cryptic messages, hinting at disagreements with Sam. (Go ahead, jump to conclusions – you know you want to!)

Altman's Firing and the Aftermath

The whole thing crashed and burned pretty fast. Sam Altman was fired in mid-November 2023 - no heads-up, just out. (pun? maybe)

The gossip (at least according to X) was that Altman's rush to bring Q* to market freaked out the board, especially Ilya Sutskever, who has always been the "let's not accidentally end the world" guy at OpenAI and the most safety-oriented voice in its pursuit of AGI.

Long story short, the board, Ilya included, fired Sam Altman, and Elon Musk had a theory. Which one? Je ne sais pas. Well, we do know, but we can't say it out loud.

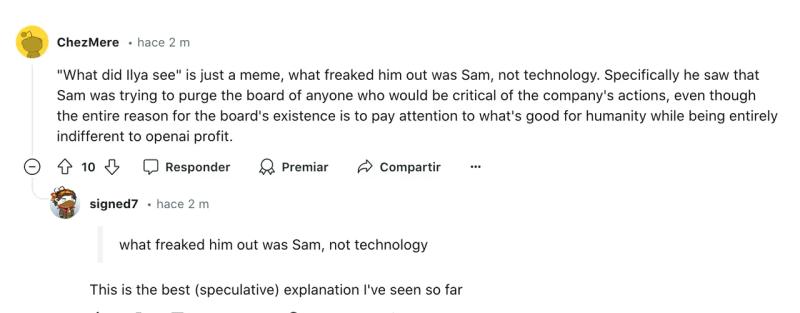

As far as I can remember, the "What did Ilya see" trend started around this time. But Musk didn't stop there: in early 2024 he sued OpenAI, accusing it of abandoning its founding nonprofit mission, and then dropped the lawsuit a few months later. On the separate fights over training models on copyrighted data, he stressed the critical role of synthetic data in the race toward Artificial General Intelligence (AGI) and argued that by the time legal battles over data use are resolved, AGI will have already arrived, leaving those disputes irrelevant. After that he focused more on SpaceX and xAI, thankfully.

At the end of it all, Altman dropped a line that got stuck in everyone's head: "Is this a tool we've built, or a creature we've birthed?" Seriously creepy stuff. It felt like watching a sci-fi movie, not a tech talk. The X community enjoyed it.

What had he seen in Q* that prompted such a drastic response from the board? Rumors of internal power struggles and hidden agendas within OpenAI began to circulate as the AI community spun theories and conspiracies. Was Q* a step too far? Had Altman uncovered a truth that others wanted to keep hidden?

But the story didn't end there. In a shocking twist, Sam Altman made a triumphant return as CEO. (Spoiler Alert: it ends badly /Spoiler Alert)

The leadership team "refocused their efforts on advancing OpenAI's research and product development", whatever that means.

To be honest, the image above is the best recap out there of this story.

As things took a bizarre turn at OpenAI, first Ilya disappeared for a few months, and X was on fire with memes everywhere.

Then, out of the blue, he resurfaced in connection with Elon's lawsuit: OpenAI published a blog post detailing its relationship with Elon, and Ilya was listed as an author. Weird.

In the end, though, Ilya and several alignment team members left OpenAI, concerned about the company's direction.

Scary. Who is behind alignment now? Oh, the US government. We are safe. There is a bit of a happy ending here: Ilya announced that he was starting a company, and he went one step further. Why talk about AGI? He is getting ready for ASI directly.

Wait, wait, wait, we got misaligned here. We were talking about Q* and you wonder why there is a strawberry picture here. Well, here we go.

The Strawberry Project

Back in July 2024, around the time OpenAI shared a new plan to track its AGI progress, word got out about the Strawberry Project. Building on Q*, Strawberry was supposed to supercharge AI's thinking skills and let it roam the internet on its own. Leaked documents suggested that this project would allow the AI to do deep research independently, which sparked both excitement and worry among experts.

The most alarming revelation comes from leaked internal documentation and an insider source. Project Strawberry, a tightly guarded secret even within OpenAI (so secret that it leaked easily), is reportedly being trained to "navigate the internet autonomously" and undertake "deep research" independently, without user prompts. This deep research capability is believed to reduce the hallucinations that plague models like ChatGPT, which often resort to fabricating information when they lack sufficient training data. The idea is that a model with access to external tools can look things up instead of making them up.

And there is more. An unnamed source also claims that OpenAI has internally tested an AI system capable of achieving over 90% accuracy on a MATH dataset, suggesting significant advances in AI's mathematical problem-solving abilities. If you remember, in November '23 we were talking about an "unprecedented ability to perform basic math", so this is the real deal.

Should we add more mystery? You asked for it. A few months ago, a new player entered the game: gpt2-chatbot. This mystery model, which appeared out of nowhere, left early users shocked with its mind-blowing capabilities. Some speculated that it could be a secret test version of GPT-4.5, GPT-5, or a new iteration of GPT-2 trained using cutting-edge techniques.

A Non-Tech Perspective

As a non-tech person with no formal background in tech studies, but rather a healthcare professional fascinated by AI, I can't help but theorize about the true nature of Q* and its connection to the enigmatic gpt2-chatbot.

After reading 'Situational Awareness', the paper published in June 2024 by ex-OpenAI employee Leopold Aschenbrenner, I landed on my conspiracy theory: Q* represents a significant algorithmic improvement, potentially blending the extensive general knowledge of GPT-4 with a sophisticated search-based reasoning algorithm, akin to the tree-of-thought search approach described by Leopold.
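Nobody outside OpenAI knows what Q* actually is, so the following is only a toy sketch of what "a model plus a search algorithm" can mean in general. It runs a best-first tree search over chains of simple arithmetic steps. The functions `propose_steps` and `score` are hypothetical stand-ins for a model that suggests next steps and an evaluator that rates them; nothing here comes from OpenAI or from the paper.

```python
import heapq

def propose_steps(value: int) -> list[tuple[str, int]]:
    """Hypothetical stand-in for a model proposing candidate next steps."""
    return [("+3", value + 3), ("*2", value * 2), ("-1", value - 1)]

def score(value: int, target: int) -> int:
    """Hypothetical stand-in for an evaluator: lower means closer to the goal."""
    return abs(target - value)

def tree_search(start: int, target: int, max_expansions: int = 1000):
    """Best-first search over chains of steps.

    Returns the list of step labels that turns `start` into `target`,
    or None if nothing is found within the expansion budget.
    """
    # Each frontier entry: (score, current value, steps taken so far).
    frontier = [(score(start, target), start, [])]
    seen = {start}
    for _ in range(max_expansions):
        if not frontier:
            return None
        _, value, path = heapq.heappop(frontier)  # most promising branch first
        if value == target:
            return path
        for label, next_value in propose_steps(value):
            if next_value not in seen:  # skip states already explored
                seen.add(next_value)
                heapq.heappush(
                    frontier,
                    (score(next_value, target), next_value, path + [label]),
                )
    return None

# Example: find some chain of steps that turns 2 into 11.
print(tree_search(2, 11))
```

The point of the sketch is the division of labor: one component proposes steps, another judges them, and the search decides which branch to explore next. Because it always follows the most promising branch, the chain it returns is valid but not necessarily the shortest one.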

The Algorithmic Breakthrough

In his paper, Leopold talks about algorithmic improvement, highlighting the potential of combining different AI techniques to achieve superior performance. He writes:

"To achieve AGI, we need to develop novel algorithms that can efficiently navigate and reason over vast knowledge graphs. By integrating techniques such as tree thought search, recursive decomposition, and meta-learning, we can create AI systems that exhibit human-like reasoning and adaptability. These algorithmic improvements will be crucial in overcoming the limitations of current language models and enabling true general intelligence."

From my point of view, gpt2-chatbot was the old GPT-2 model from 2019 with the Q* factor added, which is the algorithmic improvement. Higher performance for less compute!

At first, I thought I was going crazy from ignorance, as I actually have no idea about this stuff, but after reading 'Situational Awareness,' it seemed quite possible. Quote: "GPT-4, on release, cost ~the same as GPT-3 when it was released, despite the absolutely enormous performance increase. (If we do a naive and oversimplified back-of-the-envelope estimate based on scaling laws, this suggests that perhaps roughly half the effective compute increase from GPT-3 to GPT-4 came from algorithmic improvements.)"
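To make that back-of-the-envelope idea concrete, here is a tiny sketch of how an "effective compute" gain can be split into orders of magnitude (OOMs). It assumes the gain from more raw compute and the gain from better algorithms multiply, so their OOMs add. The function name and the example numbers are made up for illustration and are not figures from the paper.

```python
import math

def effective_compute_ooms(physical_multiplier: float,
                           algorithmic_multiplier: float) -> dict:
    """Split an effective-compute gain into physical and algorithmic OOMs."""
    physical_ooms = math.log10(physical_multiplier)
    algorithmic_ooms = math.log10(algorithmic_multiplier)
    total_ooms = physical_ooms + algorithmic_ooms  # multipliers multiply, OOMs add
    return {
        "physical_ooms": physical_ooms,
        "algorithmic_ooms": algorithmic_ooms,
        "total_ooms": total_ooms,
        # Fraction of the total gain (in OOMs) that came from algorithms.
        "algorithmic_share": algorithmic_ooms / total_ooms,
    }

# Hypothetical numbers: 100x more raw compute and a 100x algorithmic gain.
result = effective_compute_ooms(100, 100)
print(result)
```

With these invented inputs, half of the total gain comes from algorithms, which is the kind of split the quote describes: the model behaves as if it had far more compute than was actually spent on it.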

If Q* truly represents a fusion of GPT-4's extensive knowledge with the advanced reasoning capabilities described by Leopold, then we may be witnessing the dawn of a new era in artificial intelligence, one where models like gpt2-chatbot are merely the tip of the iceberg. But are we truly ready for the world that awaits us on the other side of this technological singularity? That is the right question to end this article on.

"it's pure hubris to think that we can control something so powerful, but perhaps, in a cruel twist of fate, it's the payback we've earned for our transgressions" Root.

The End