Situational Awareness - Chapter 2 (Tech-Challenged Version)

*100% Organic Human Writing by a Tech Impostor.*

*Note:*

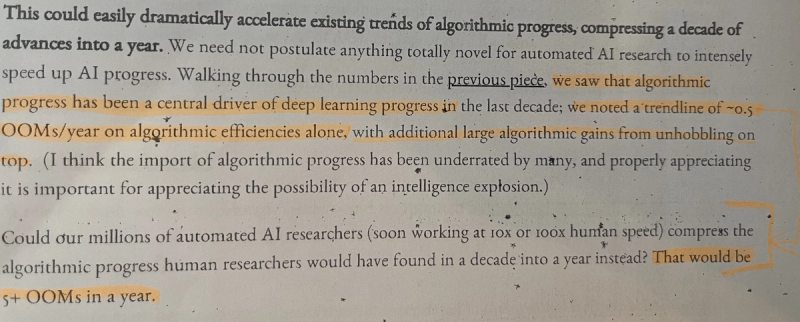

I explained what OOMs (orders of magnitude) are in the previous blog post. Since the term sounds quite snobby, I will use 'inflation rate' from now on; it sounds friendlier to non-techies.

In the previous chapter, we realized how quickly research is scaling in the AI ecosystem. That's good.

Is it?

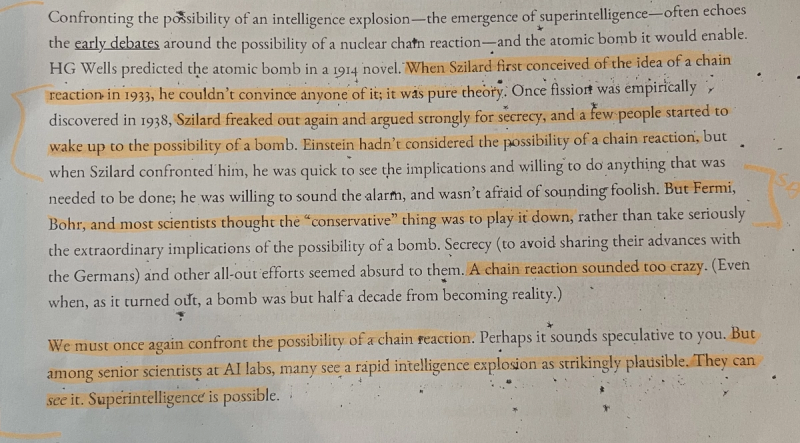

This chapter of Situational Awareness can be summarized in one sentence: the A-bomb is to the H-bomb what AGI is to ASI.

Boom! What a powerful opening!

Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an 'intelligence explosion,' and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make.

I. J. Good (1965)

Aschenbrenner opens the chapter with a strong analogy: the leap from atomic bombs to hydrogen bombs mirrors the leap from AGI to ASI. He makes a great point: when the H-bomb was developed, a weapon vastly more destructive than all the bombs of WWII combined, nuclear policies and war plans stayed stuck at the A-bomb level, completely missing the exponential leap in power and impact.

As Leopold says, "The Bomb was a more efficient bombing campaign. The Super was a country-annihilating device. So it will be with AGI and Superintelligence."

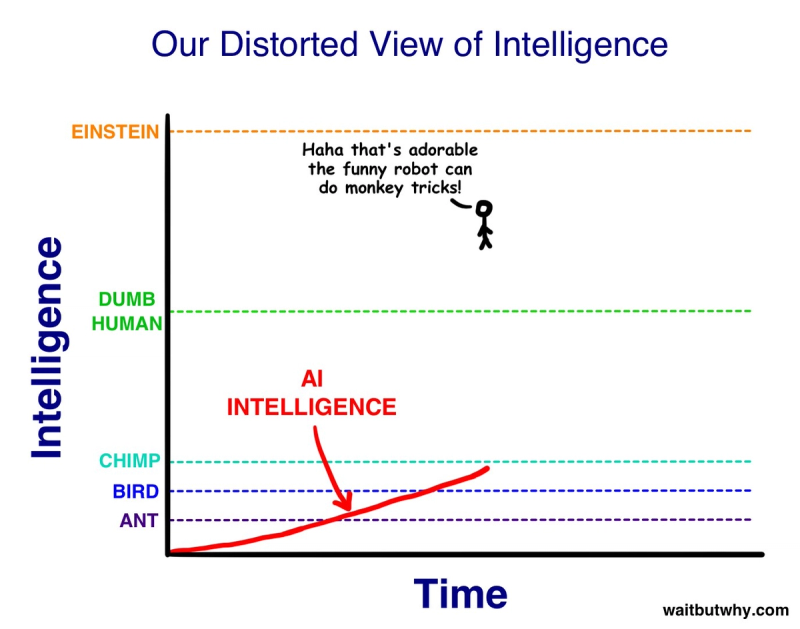

Right now, AI is roughly at human level. We compare different models to preschoolers or to PhD students, and most of the AI available today is already smarter than some humans.

But do you remember the story of AlphaGo? It first learned from the best human games, then started playing against itself, quickly becoming superhuman. What does superhuman mean? During its 2016 match against Lee Sedol, AlphaGo made a move that no human would have thought of, largely thanks to the Reinforcement Learning stage of its training, where it improved by playing against itself rather than by following human feedback. As discussed in the previous post, human feedback would have capped the AI's vast abilities; we would be limiting it with, to add an analogy here, our "morals."

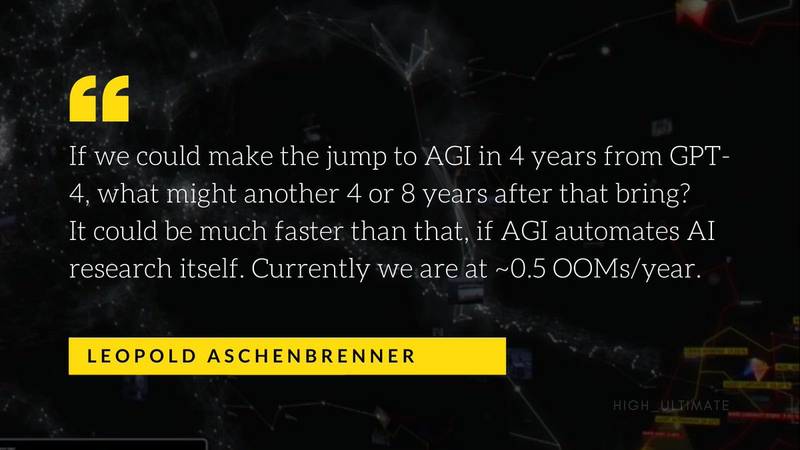

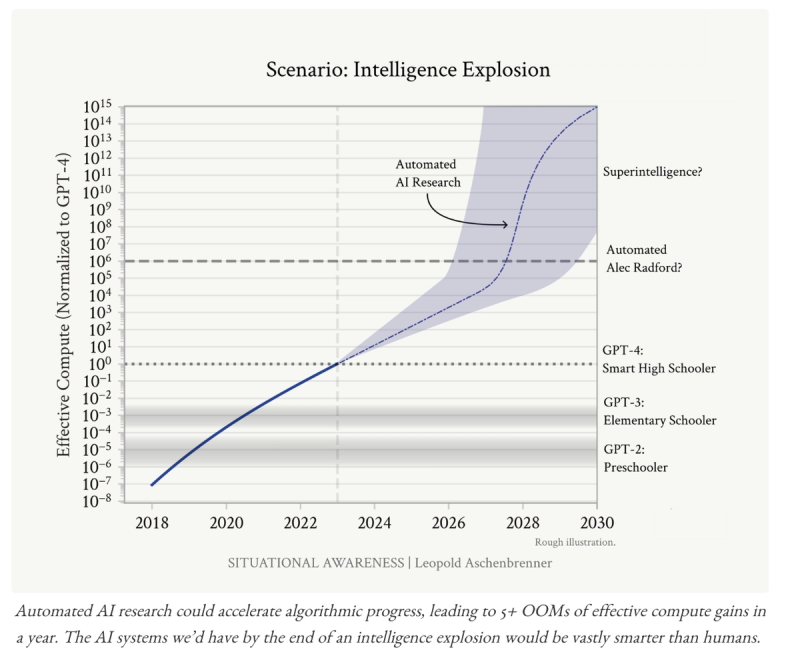

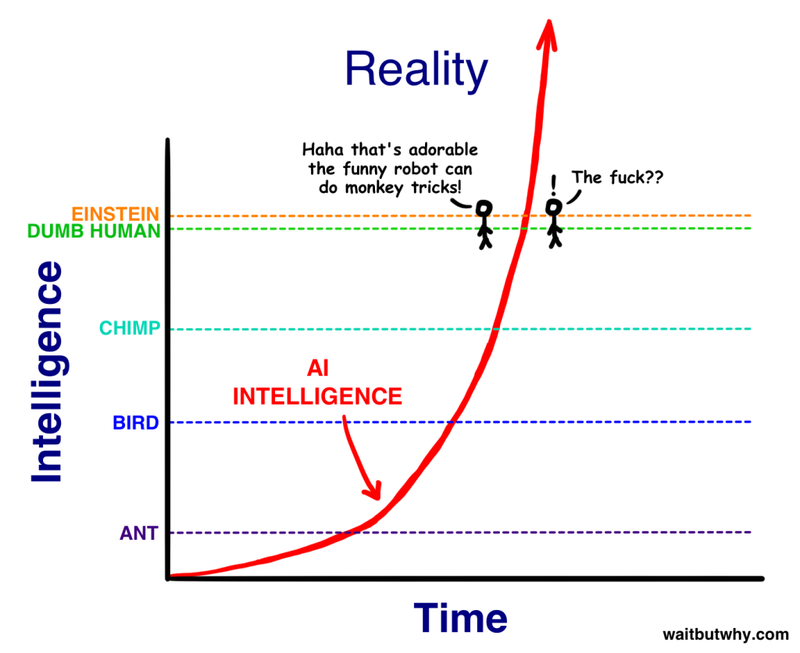

Once we achieve AGI (which has probably already happened internally), we won't have ONE AGI; we will have many millions of them. Imagine AGIs in AI labs working furiously, day and night, on automated AI research in search of algorithmic breakthroughs, leading us to ASI sooner than we think. What would take humans a decade could take AI less than a year.

Superintelligence means AI systems vastly smarter than us, capable of things we couldn't even begin to understand. I'm not sure you really grasp the scope here: we're not talking about sci-fi. If the models we have now have already made big discoveries in various fields, imagine an ASI that could provide a decisive military advantage or unlock new means of destruction. How long did it take to build the H-bomb? What if an ASI could come up with something vastly more powerful than the H-bomb in a week?

I always give skeptics the same example: if AI is so safe, why do CBRN (Chemical, Biological, Radiological, and Nuclear) risk tests exist? As far as I know, they are not run by a conspiracy theory department. They exist to assess possible risks at the intersection of AI and CBRN threats. So this needs to be taken seriously. Yet many still think that AI is only a next-word predictor.

Leopold Aschenbrenner wrote that "We will be faced with one of the most intense and volatile moments in human history," and he couldn't have used better words.

AI research

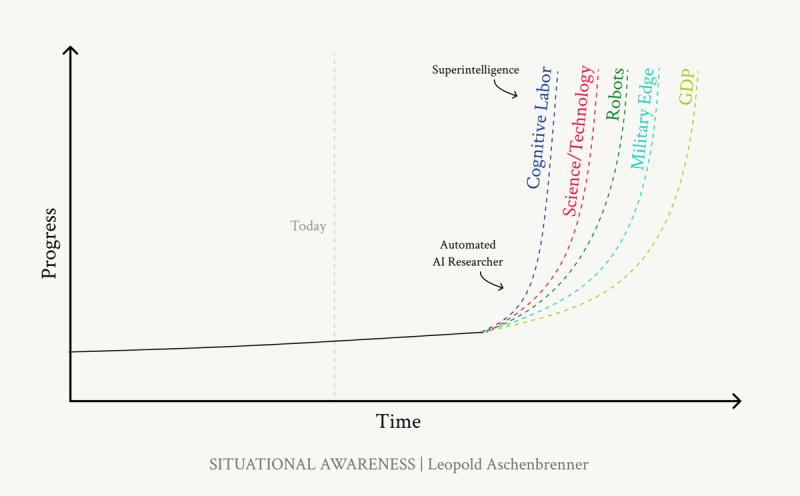

Simply automating AI research could be enough to bring on those volatile times. Automated AI researchers will be able to do a year's worth of work in a few days, which will be extraordinary, and scary.

Even though Leopold uses Alec Radford as an example, I will use someone better known to the general public. Imagine an automated Elon Musk. Imagine 100 million automated Elon Musks.

These "Elon Musks" would each have other enormous advantages over human researchers:

1 - They will be able to read every single document (ok, every Machine Learning paper) ever written, know about every experiment ever run at the lab, think deeply about each one and its possible alternative outcomes, learn in parallel from each other's copies, and quickly accumulate the equivalent of a millennium of experience. No, no human feedback needed here.

2 - They will write state-of-the-art code, optimized and without the need for debugging.

3 - Efficiency: you will only have to train ONE automated AI researcher, and then make replicas.

4 - These AI researchers wouldn't just be insanely smart on their own; they'd also be perfect team players. They'd share everything: context, ideas, and even 'thoughts' (latent space, which is like the AI's internal way of organizing knowledge). No miscommunication, no egos, no wasted time.

5 - The inflation rate doesn't stop, so after those incredibly smart researchers, even smarter models will arrive.

Timeframe? From AGI to ASI we could be talking about less than a year.

Possible bottlenecks

The following items are some of the factors that may slow things down:

- Limited compute for experiments

- Human AI researchers: the parts of research that still need humans will hold everything back. As a classic lesson from economics puts it, "if you can automate 70% of something, the remaining 30% will become your bottleneck" (cf. Baumol's growth disease; I just learned about it).

- Ideas get harder to find: each new breakthrough takes more research effort than the last, so even a big increase in research may not be enough to keep progress going at the same pace.
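To make the 70/30 lesson concrete, here is a tiny Python sketch. It borrows Amdahl's law, a formula from computing that captures the same idea as the quote (using it here is my own framing, not Leopold's): if a fraction p of the work gets sped up s times, the whole job only gets faster by 1 / ((1 - p) + p / s). The function name `overall_speedup` is just something I made up for illustration.

```python
def overall_speedup(automated_fraction: float, speedup_of_automated_part: float) -> float:
    """Amdahl-style estimate of how much faster the whole job gets.

    automated_fraction: share of the work that is automated (0.0 to 1.0).
    speedup_of_automated_part: how many times faster that share becomes.
    (Illustrative helper, not from the original text.)
    """
    if not 0.0 <= automated_fraction <= 1.0:
        raise ValueError("automated_fraction must be between 0 and 1")
    if speedup_of_automated_part <= 0:
        raise ValueError("speedup_of_automated_part must be positive")
    # The human part takes the same time as before; only the automated part shrinks.
    remaining_time = (1.0 - automated_fraction) + automated_fraction / speedup_of_automated_part
    return 1.0 / remaining_time


if __name__ == "__main__":
    for s in (10, 1_000, 1_000_000):
        print(f"70% automated, {s:,}x faster -> {overall_speedup(0.7, s):.2f}x overall")
    # Even if the automated part took zero time, the ceiling would be 1 / 0.3.
    print(f"ceiling: {1 / 0.3:.2f}x")
```

The takeaway: making the automated 70% ten times faster gives you only about 2.7x overall, and even making it a million times faster never gets you past roughly 3.33x, because the untouched 30% is still there.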

How sustainable is rapid progress?

The power of Superintelligence

Lovely topic. I can't wait to dig into it in the next blog post, where I will touch on alignment.

The AI systems we will soon have will be unimaginably powerful, obviously superhuman. I don't know why many still think that's so difficult to achieve; most of humanity is dumb, not for lack of access to education, which is quite easy to get today, but for lack of will. Only a small percentage of humanity is smart. How difficult is it to be superhuman? Quite easy.

ASI will master any domain, think way faster than Jordan Peterson, write code so complex that humans won't be able to understand it, and have more experience than any earthling. Factories will be directed by AI, using humans as physical labor until robots fully replace them.(1) These systems will work without ever getting sick or needing holidays.

Wait, what? Robots? The following text is too exquisite to summarize, so I will quote Leopold directly:

Robots

(1) A common objection to claims like those here is that, even if AI can do cognitive tasks, robotics is lagging way behind and so will be a brake on any real-world impacts.

I used to be sympathetic to this, but I’ve become convinced robots will not be a barrier. For years people claimed robots were a hardware problem—but robot hardware is well on its way to being solved.

Increasingly, it’s clear that robots are an ML algorithms problem. LLMs had a much easier way to bootstrap: you had an entire internet to pretrain on. There’s no similarly large dataset for robot actions, and so it requires more nifty approaches (e.g. using multimodal models as a base, then using synthetic data/simulation/clever RL) to train them.

There’s a ton of energy directed at solving this now. But even if we don’t solve it before AGI, our hundreds of millions of AGIs/superintelligences will make amazing AI researchers (as is the central argument of this piece!), and it seems very likely that they’ll figure out the ML to make amazing robots work.

As such, while it’s plausible that robots might cause a few years of delay (solving the ML problems, testing in the physical world in a way that is fundamentally slower than testing in simulation, ramping up initial robot production before the robots can build factories themselves, etc.)—I don’t think it’ll be more than that.

Superintelligence could even be able to overthrow the US government. Just as Cortés and a few hundred Spaniards defeated the Aztec Empire in the early 1500s with better tools and clever strategies, superintelligence might achieve overwhelming dominance, not because it's god-like, but because it operates on a completely different level of power: it is simply so much more advanced than us.

There is a real possibility that we will lose control, as we are forced to hand off trust to AI systems during this rapid transition. More generally, everything will just start happening incredibly fast. And the world will start going insane. Superintelligent AI systems will be running our military and economy.

The intelligence explosion and the immediate post-superintelligence period will be one of the most volatile, tense, dangerous, and wildest periods ever in human history. And by the end of the decade, we'll likely be in the midst of it.

We must once again confront the possibility of a chain reaction. Perhaps it sounds speculative to you. But among senior scientists at AI labs, many see a rapid intelligence explosion as strikingly plausible. They can see it. Superintelligence is possible.

The End